I am a Ph.D. researcher in the Deep Learning Lab at West Virginia University, under the guidance of Prof. Nasser Nasrabadi. My research focuses on developing advanced machine learning algorithms for applications in computer vision and biometrics. With over seven years of research experience, I specialize in multimodal foundation models, facial representation learning, cross-modal face recognition, face retrieval, and continual learning. My work has been published in venues including WACV (two papers), TBIOM, TAES, and IJCB.

As I approach the final year of my Ph.D. in 2025, I am actively seeking opportunities for research internships and visiting student positions.

If you have any questions about my work, please feel free to email me at mh00062@mix.wvu.edu.

🔥 News

- 2024.09: 🎉 Our paper was published at WACV 2025.

- 2024.09: 🎉 Our journal paper was published in IEEE Transactions on Biometrics, Behavior, and Identity Science (TBIOM).

- 2023.12: 🎉 Our journal paper was published in IEEE Transactions on Aerospace and Electronic Systems (TAES).

- 2023.10: 🎉 Our paper was published at WACV 2024.

- 2023.06: 🎉 One paper was published at IJCB 2023.

- 2023.04: Attended the EAB and CITeR Biometrics Workshop in Martigny, Switzerland, organized by the European Association for Biometrics (EAB) in collaboration with the Center for Identification Technology Research (CITeR) and the Idiap Research Institute, where I presented two progress reports and one final project report.

- 2023.01: 🎉 One journal paper was published in IET Biometrics.

- 2022.11: I received the Best Poster Award at the CITeR Fall Program Review.

- 2022.11: 🔥 Awarded a research grant from CITeR for our new project (Project #22F-01W), A Perpetual Deep Face Recognition System, which aims to build a dynamic deep learning model that continually learns new FR tasks.

- 2022.04: 🔥 Awarded a research grant from CITeR for our new project (Project #22S-06W), One-to-One Face Recognition with Human Examiner in the Loop, which aims to improve the performance of an FR system with a human examiner in the loop.

- 2021.06: I joined the Deep Learning Lab at WVU to work under Prof. Nasser Nasrabadi as a PhD researcher!

- 2021.04: 🎉 Our journal paper was published in IET Computer Vision.

- 2020.09: 🔥 I defended my M.Sc. thesis at IICT, BUET. Thanks to Dr. Hossen Asiful Mustafa for his invaluable supervision.

📖 Education

2021.06 - Present

- PhD in Computer Engineering, West Virginia University (WVU)

- Advisor: Dr. Nasser M. Nasrabadi, Professor, LCSEE, WVU

2014.10 - 2020.09

- M.Sc. in Information and Communication Technology (ICT), Bangladesh University of Engineering and Technology (BUET)

- Thesis: View Invariant Gait Recognition for Person Re-Identification in a Multi Surveillance Camera Environment [HTML], [Code]

- Advisor: Dr. Hossen Asiful Mustafa, Professor, IICT, BUET

2008.12 - 2013.09

- B.Sc. in Electrical and Electronic Engineering, Khulna University of Engineering and Technology (KUET)

💻 Professional Experience

- 2021.06 - Present, Graduate researcher at West Virginia University (WVU)

- 2021.06 - 2023.08, Graduate researcher at Center for Identification Technology Research (CITeR)

- 2019.04 - 2021.06, Teaching Faculty at Manarat International University (MIU), Department of Computer Science and Engineering (CSE)

📝 Publications

🧑🎨 Face Recognition

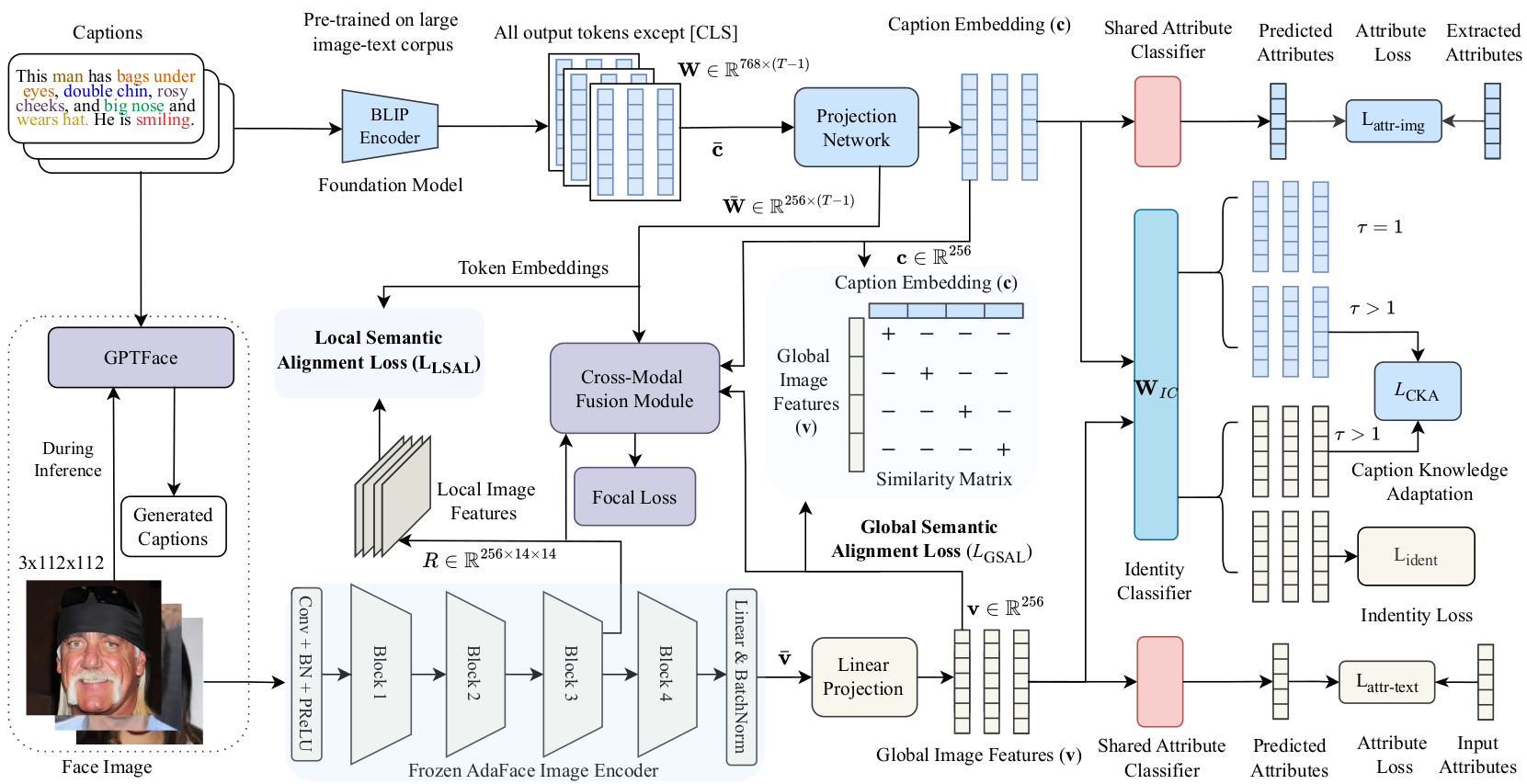

Learning Multi-Scale Knowledge-Guided Features for Text-Guided Face Recognition

IEEE Transactions on Biometrics, Behavior, and Identity Science (TBIOM)

Md Mahedi Hasan, Shoaib Meraj Sami, Nasser Nasrabadi, and Jeremy Dawson [HTML] [PDF] [CODE]

Abstract

Text-guided face recognition (TGFR) aims to improve the performance of state-of-the-art face recognition (FR) algorithms by incorporating auxiliary information, such as distinct facial marks and attributes, provided as natural language descriptions. Current TGFR algorithms have been proven to be highly effective in addressing performance drops in state-of-the-art FR models, particularly in scenarios involving sensor noise, low resolution, and turbulence effects. Although existing methods explore various algorithms using different cross-modal alignment and fusion techniques, they encounter practical limitations in real-world applications. For example, during inference, textual descriptions associated with face images may be missing, lacking crucial details, or incorrect. Furthermore, the presence of inherent modality heterogeneity poses a significant challenge in achieving effective cross-modal alignment. To address these challenges, we introduce CaptionFace, a TGFR framework that integrates GPTFace, a face image captioning model designed to generate context-rich natural language descriptions from low-resolution facial images. By leveraging GPTFace, we overcome the issue of missing textual descriptions, expanding the applicability of CaptionFace to single-modal FR datasets. Additionally, we introduce a multi-scale feature alignment (MSFA) module to ensure semantic alignment between face-caption pairs at different granularities. Furthermore, we introduce an attribute-aware loss and perform knowledge adaptation to specifically adapt textual knowledge from facial features. Extensive experiments on three face-caption datasets and various unconstrained single-modal benchmark datasets demonstrate that CaptionFace significantly outperforms state-of-the-art FR models and existing TGFR approaches.

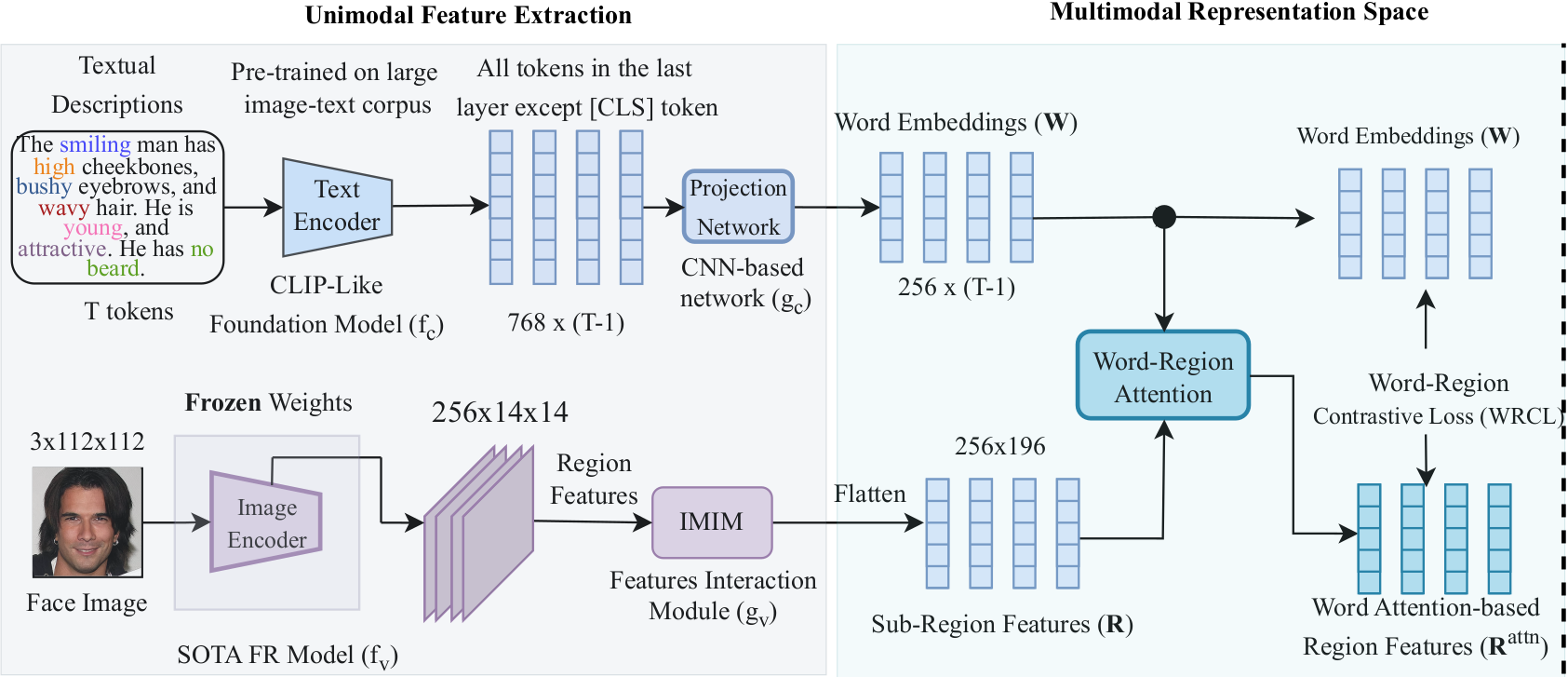

Text-Guided Face Recognition using Multi-Granularity Cross-Modal Contrastive Learning

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV 2024)

Md Mahedi Hasan, Shoaib Meraj Sami, and Nasser Nasrabadi. [HTML] [PDF] [Video]

Abstract

State-of-the-art face recognition (FR) models often experience a significant performance drop when dealing with facial images in surveillance scenarios where images are in low quality and often corrupted with noise. Leveraging facial characteristics, such as freckles, scars, gender, and ethnicity, becomes highly beneficial in improving FR performance in such scenarios. In this paper, we introduce text-guided face recognition (TGFR) to analyze the impact of integrating facial attributes in the form of natural language descriptions. We hypothesize that adding semantic information into the loop can significantly improve the image understanding capability of an FR algorithm compared to other soft biometrics. However, learning a discriminative joint embedding within the multimodal space poses a considerable challenge due to the semantic gap in the unaligned image-text representations, along with the complexities arising from ambiguous and incoherent textual descriptions of the face. To address these challenges, we introduce a face-caption alignment module (FCAM), which incorporates cross-modal contrastive losses across multiple granularities to maximize the mutual information between local and global features of the face-caption pair. Within FCAM, we refine both facial and textual features for learning aligned and discriminative features. We also design a face-caption fusion module (FCFM) that applies fine-grained interactions and coarse-grained associations among cross-modal features. Through extensive experiments conducted on three face-caption datasets, proposed TGFR demonstrates remarkable improvements, particularly on low-quality images, over existing FR models and outperforms other related methods and benchmarks.

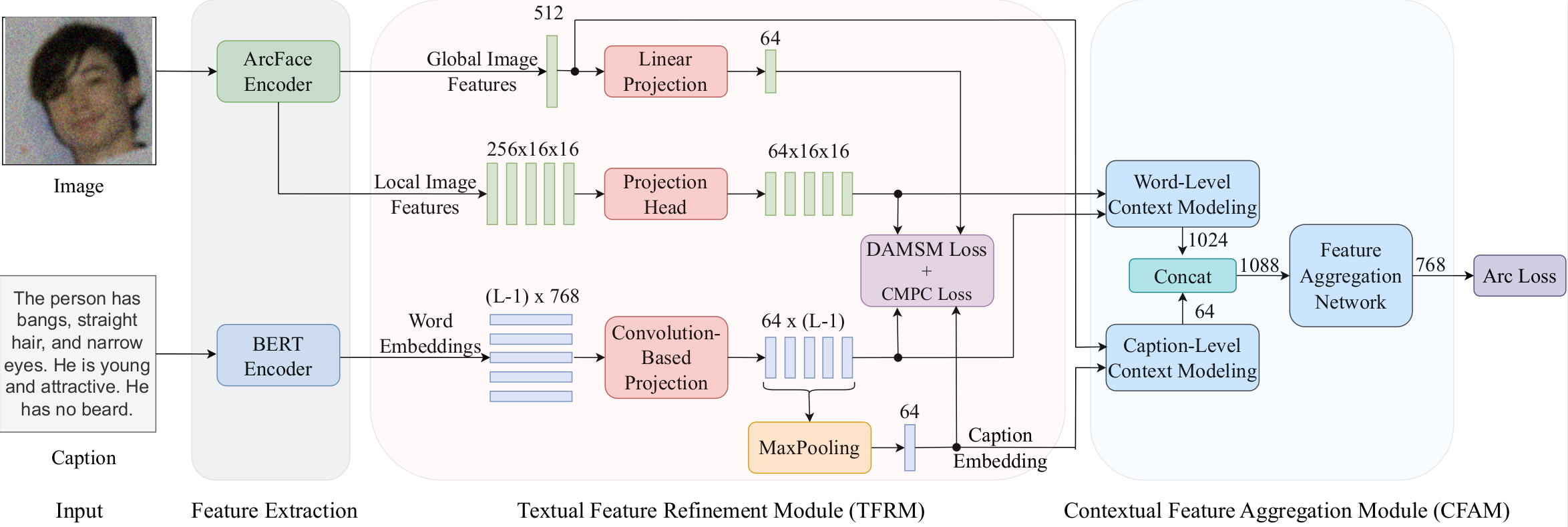

Improving Face Recognition from Caption Supervision with Multi-Granular Contextual Feature Aggregation

2023 IEEE International Joint Conference on Biometrics (IJCB 2023)

Md Mahedi Hasan and Nasser Nasrabadi [HTML] [PDF] [CODE]

Abstract

We introduce caption-guided face recognition (CGFR) as a new framework to improve the performance of commercial-off-the-shelf (COTS) face recognition (FR) systems. In contrast to combining soft biometrics (e.g., facial marks, gender, and age) with face images, in this work, we use facial descriptions provided by face examiners as a piece of auxiliary information. However, due to the heterogeneity of the modalities, improving the performance by directly fusing the textual and facial features is very challenging, as both lie in different embedding spaces. In this paper, we propose a contextual feature aggregation module (CFAM) that addresses this issue by effectively exploiting the fine-grained word-region interaction and global image-caption association. Specifically, CFAM adopts a self-attention and a cross-attention scheme for improving the intra-modality and inter-modality relationship between the image and textual features, respectively. Additionally, we design a textual feature refinement module (TFRM) that refines the textual features of the pre-trained BERT encoder by updating the contextual embeddings. This module enhances the discriminative power of textual features with a cross-modal projection loss and realigns the word and caption embeddings with visual features by incorporating a visual-semantic alignment loss. We implemented the proposed CGFR framework on two face recognition models (ArcFace and AdaFace) and evaluated its performance on the Multi-Modal CelebA-HQ dataset. Our framework significantly improves the performance of ArcFace in both 1:1 verification and 1:N identification protocols.

🧑🎨 Continual Learning

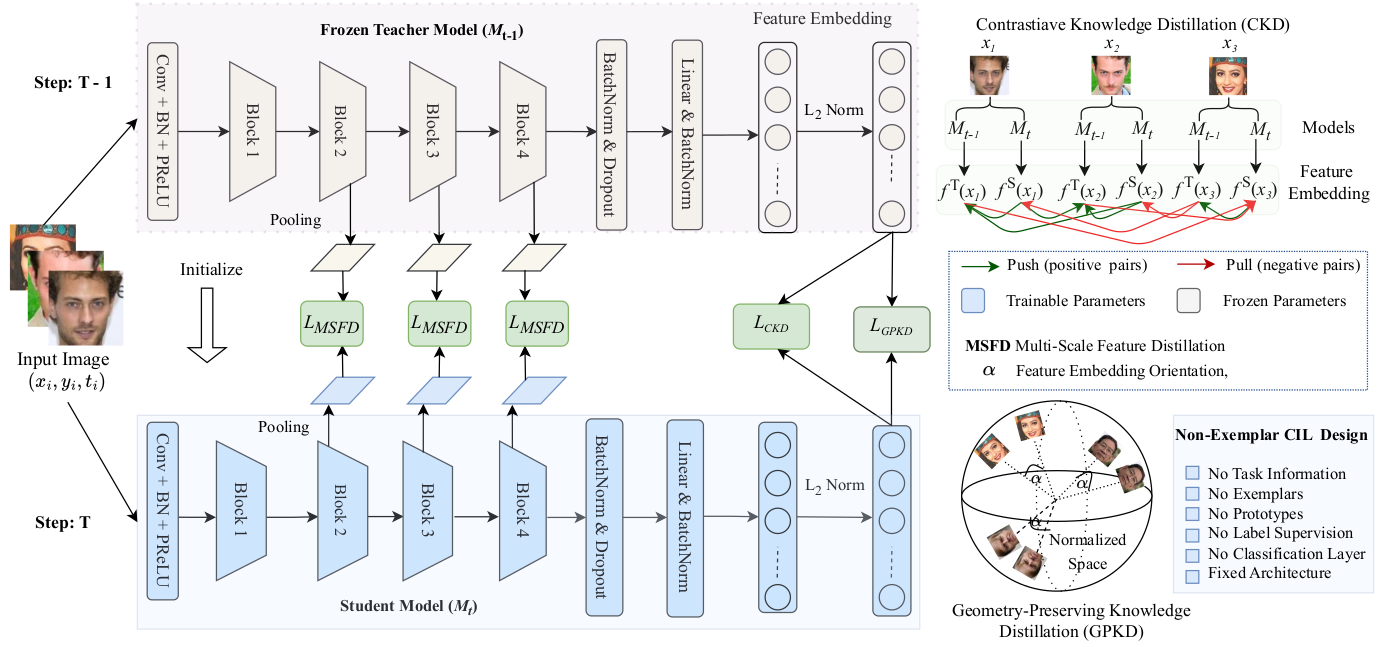

CLFace: A Scalable and Resource-Efficient Continual Learning Framework for Lifelong Face Recognition

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV 2025)

Md Mahedi Hasan, Shoaib Meraj Sami, Nasser Nasrabadi, and Jeremy Dawson [Arxiv] [CODE]

Abstract

An important aspect of deploying face recognition (FR) algorithms in real-world applications is their ability to learn new face identities from a continuous data stream. However, the online training of existing deep neural network-based FR algorithms, which are pre-trained offline on large-scale stationary datasets, encounters two major challenges: (1) catastrophic forgetting of previously learned identities, and (2) the need to store past data for complete retraining from scratch, leading to significant storage constraints and privacy concerns. In this paper, we introduce CLFace, a continual learning framework designed to preserve and incrementally extend the learned knowledge. CLFace eliminates the classification layer, resulting in a resource-efficient FR model that remains fixed throughout lifelong learning and provides label-free supervision to a student model, making it suitable for open-set face recognition during incremental steps. We introduce an objective function that employs feature-level distillation to reduce drift between feature maps of the student and teacher models across multiple stages. Additionally, it incorporates a geometry-preserving distillation scheme to maintain the orientation of the teacher model's feature embedding. Furthermore, a contrastive knowledge distillation is incorporated to continually enhance the discriminative power of the feature representation by matching similarities between new identities. Experiments on several benchmark FR datasets demonstrate that CLFace outperforms baseline approaches and state-of-the-art methods on unseen identities using both in-domain and out-of-domain datasets.

🎼 Automatic Target Recognition

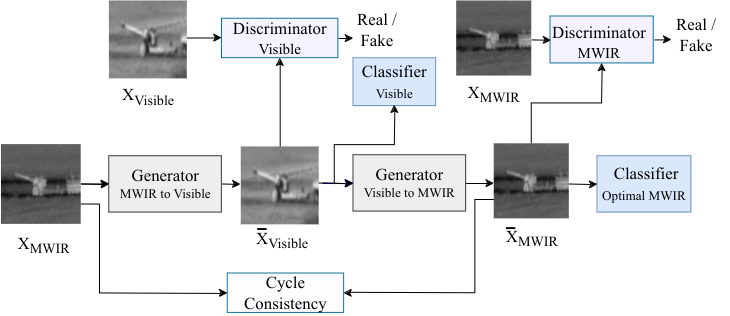

Contrastive Learning and Cycle Consistency-Based Transductive Transfer Learning for Target Annotation

IEEE Transactions on Aerospace and Electronic Systems (TAES 2024)

Shoaib Meraj Sami, Md Mahedi Hasan, Nasser Nasrabadi, Raghuveer Rao [HTML]

Abstract

Annotating automatic target recognition (ATR) is a highly challenging task, primarily due to the unavailability of labeled data in the target domain. Hence, it is essential to construct an optimal target domain classifier by utilizing the labeled information of the source domain images. The transductive transfer learning (TTL) method that incorporates a CycleGAN-based unpaired domain translation network has been previously proposed in the literature for effective ATR annotation. Although this method demonstrates great potential for ATR, it severely suffers from lower annotation performance, higher Fréchet inception distance (FID) score, and the presence of visual artifacts in the synthetic images. To address these issues, we propose a hybrid contrastive learning-based unpaired domain translation (H-CUT) network that achieves a significantly lower FID score. It incorporates both attention and entropy to emphasize the domain-specific region, a noisy feature mixup module to generate high variational synthetic negative patches, and a modulated noise contrastive estimation (MoNCE) loss to reweight all negative patches using optimal transport for better performance. Our proposed contrastive learning and cycle-consistency-based TTL (C3TTL) framework consists of two H-CUT networks and two classifiers. It simultaneously optimizes cycle-consistency, MoNCE, and identity losses. In C3TTL, two H-CUT networks have been employed through a bijection mapping to feed the reconstructed source domain images into a pretrained classifier to guide the optimal target domain classifier. Extensive experimental analysis conducted on six ATR datasets demonstrates that the proposed C3TTL method is effective in annotating civilian and military vehicles, ships, planes, and human targets.

📚 Fingerprint Recognition

- IET Biometrics 2023. On Improving Interoperability for Cross-Domain Multi-Finger Fingerprint Matching Using Coupled Adversarial Learning, Md Mahedi Hasan, Nasser Nasrabadi, and Jeremy Dawson [HTML] [PDF]

- BIOSIG 2022 [Oral]. Deep Coupled GAN-Based Score-Level Fusion for Multi-Finger Contact to Contactless Fingerprint Matching, Md Mahedi Hasan, Nasser Nasrabadi, and Jeremy Dawson [HTML] [PDF] [CODE]

🎼 Gait Recognition

- IET Computer Vision 2021. Learning view-invariant features using stacked autoencoder for skeleton-based gait recognition, Md Mahedi Hasan and Hossen Asiful Mustafa [HTML] [PDF]

- IJCSIS 2021. Multi-level feature fusion for robust pose-based gait recognition using RNN, Md Mahedi Hasan and Hossen Asiful Mustafa [PDF]

Others

- ETCCE 2021. A deep Spatio-temporal network for vision-based sexual harassment detection, Md Shamimul Islam, Md Mahedi Hasan, Sohaib Abdullah, et al.

- Book Chapter 2020. Deep Learning based Early Detection and Grading of Diabetic Retinopathy Using Retinal Fundus Images, Sheikh Muhammad Saiful Islam, Md Mahedi Hasan, Sohaib Abdullah [arXiv]

- RAAICON 2019. Robust Pose-Based Human Fall Detection using Recurrent Neural Network, Md Mahedi Hasan, Md Shamimul Islam, Sohaib Abdullah

- ICBSLP 2019. Aibangla: A Benchmark Dataset for Isolated Bangla Handwritten Basic and Compound Character Recognition, Md Mahedi Hasan, Mahathir Mohammad Abir, et al. [dataset]

- ICBSLP 2018. YOLO-Based Three-Stage Network for Bangla License Plate Recognition in Dhaka Metropolitan City, Sohaib Abdullah, Md Mahedi Hasan, Sheikh Muhammad Saiful Islam [code], [dataset]

- ICCIT 2018. DEEPGONET: Multi-label Prediction of GO Annotation for Protein from Sequence Using Cascaded Convolutional and Recurrent Network, Sheikh Muhammad Saiful Islam, Md Mahedi Hasan

📝 Research Grants

Multi-Finger Contactless Fingerprint Matching

- PI Name: Jeremy M. Dawson, Nasser M. Nasrabadi

- Name of Funding Organization: CITeR (Project #21S-04W), IUCRC - NSF

- Period of Grant Award: 1 Year (08/13/2021 - 08/12/2022)

- Amount: $50,000

- Project Title: Evaluation of the Performance of Multi-Finger Contactless Fingerprint Matching

- My Role in the Project: I developed an algorithm for multi-finger contactless fingerprint matching. I achieved all project milestones under the supervision of the PIs. I presented the final report and webinar, and published two academic papers based on the experimental results.

One-to-One Face Recognition

- PI Name: Nasser M. Nasrabadi, Jeremy M. Dawson

- Name of Funding Organization: CITeR (Project #22S-06W), IUCRC - NSF

- Period of Grant Award: 1 Year (11/05/2022 - 04/21/2023)

- Amount: $50,000

- Project Title: One-to-One Face Recognition with Human Examiner in the Loop

- My Role in the Project: I developed a text-guided face recognition (FR) system to improve the performance of state-of-the-art FR algorithms by integrating facial attributes through natural language descriptions. I met all project milestones under the supervision of the PIs. I presented both the progress report and the final report, and published two academic papers.

Deep Face Recognition

- PI Name: Nasser M. Nasrabadi, Md Mahedi Hasan

- Name of Funding Organization: CITeR (Project #22F-01W), IUCRC - NSF

- Period of Grant Award: 1 Year (09/03/2022 - 10/25/2023)

- Amount: $50,000

- Project Title: A Perpetual Deep Face Recognition System

- My Role in the Project: I wrote the proposal with Prof. Nasrabadi. I designed the class-incremental learning framework, which can learn and improve from a sequence of face recognition tasks without storing any exemplar sets. I completed all the project milestones and presented the progress and final reports.

📝 Research Projects

License Plate Recognition

- Project Title: Real-time Automatic Bangla License Plate Detection and Recognition

- Period: 1 Year (05/01/2018 - 04/30/2019)

- Description: We developed a real-time automatic Bangla license plate recognition system based on YOLO-v3. Additionally, we curated a dataset comprising 1,500 diverse images of Bangladeshi vehicular license plates. These images were manually captured from streets, covering various real-world scenarios. This project was funded by the Department of CSE, Manarat International University (MIU).

- Resources: [dataset], [paper]

Bangla Handwritten Character Recognition

- Project Title: Deep Isolated Bangla Handwritten Basic and Compound Character Recognition

- Period: 1 Year (01/12/2018 - 12/30/2018)

- Description: We present AIBangla, a new benchmark image database for isolated handwritten Bangla characters with detailed usage and a performance baseline. Our dataset contains 80,403 handwritten images of 50 Bangla basic characters and 249,911 handwritten images of 171 Bangla compound characters, written by more than 2,000 unique writers from various institutes across Bangladesh. This project was funded by the Department of CSE, Manarat International University (MIU).

- Resources: [dataset], [paper]

Bangla Sign Language Recognition

- Project Title: Deep Bangla Sign Language Recognition from Video: A New Large-scale Dataset

- Period: 2 Years (11/05/2021 - 04/05/2023)

- Description: We have developed an attention-based Bi-GRU model that captures the temporal dynamics of pose information used by individuals communicating through sign language. Furthermore, we created a large-scale dataset called the MVBSL-W50, which comprises 50 isolated words across 13 categories.

- Resources: [dataset], [paper]

🎖 Awards

- 2022.11 Best Poster Award at the CITeR Fall Program Review

- 2009-2012 University Merit Scholarship (Undergraduate), awarded by the Govt. of the People’s Republic of Bangladesh for all eight terms of undergraduate study

💬 Reviewing

- Reviewer at IEEE/CVF Conference on Computer Vision and Pattern Recognition

- Reviewer at IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)

- Reviewer at IEEE Transactions on Automation Science and Engineering

- Reviewer at IEEE Transactions on Circuits and Systems for Video Technology

- Reviewer at IEEE Transactions on Power Delivery

- Reviewer at Engineering Applications of Artificial Intelligence, Elsevier

- Reviewer at Pattern Recognition

💬 Teaching

2019.04 - 2021.05

- Teaching Faculty at Manarat International University (MIU), Department of Computer Science and Engineering (CSE)

- Responsibilities: Conducting undergraduate classes

- CSE-437: Computer Vision and Robotics [Spring 2019], [Fall 2019]

- CSE-411: Artificial Intelligence [Summer 2019]

- CSE-433: Neural Networks and Fuzzy Systems [Fall 2019]

- Supervising undergraduate student research and projects

💬 Affiliations

2015.03 - 2021.05

- Assistant Editor of Byapon Science Magazine

- Bi-monthly youth science magazine, printed and circulated nationwide, with more than 15,000 copies per issue

- Office: 48/1, Motijheel C/A, Dhaka-1200, Bangladesh

2023.07 - Present

- IEEE Student Member

💬 Invited Talks

- 2023.12, The Engine Behind ChatGPT. Cognitive Mind Webinar, Dhaka, Bangladesh. [video]

- 2019.11, Deep Learning in Computer Vision. Google Developer Group, Sonargaon, Dhaka, Bangladesh. [video]

- 2020.03, Gait Recognition. CSE Fest 2020, MIU, Dhaka, Bangladesh.